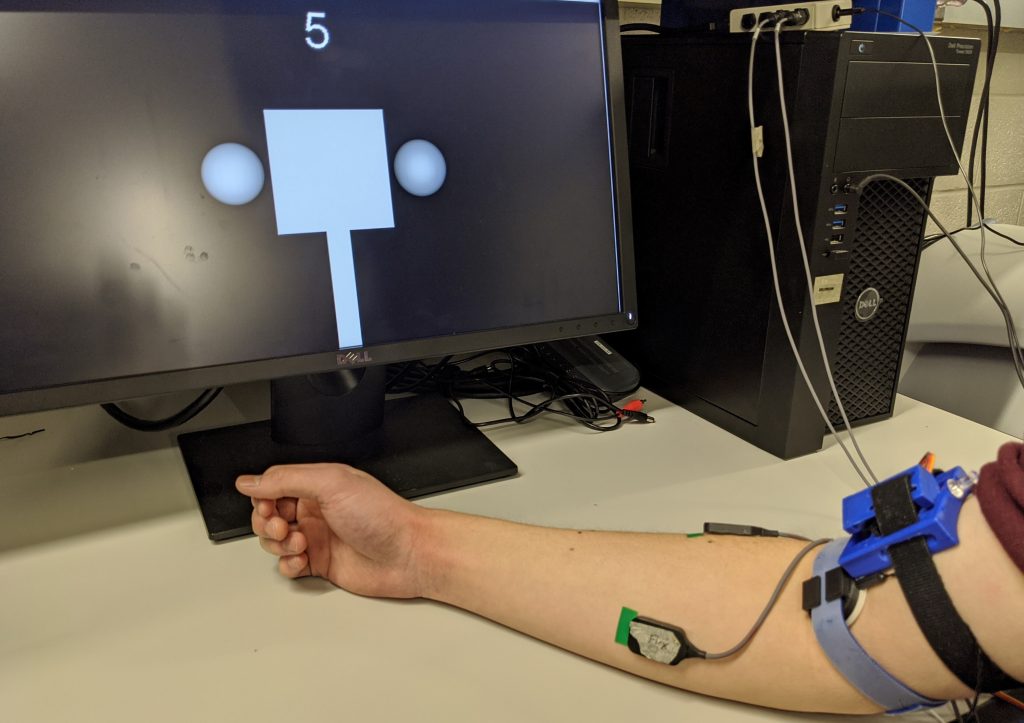

The study of human-machine interaction is extremely useful in designing and improving the human experience in both real and virtual environments. Rendering a desired haptic experience through a device, however, can be challenging because of the complexity of the human body's physical sensing mechanisms and of the cognitive system involved in haptic perception, compounded by the effects of other sensing modalities. This research focuses primarily on the psychophysics of human interaction, to better understand how the human brain can be used in the control loop of haptic devices to compensate for the inertia of mechanical systems, to simulate the inertia required for a lifelike experience, or both.

When we want a cup of coffee, our need to drink is at the forefront of our consciousness. On a subconscious level, our brain handles the tasks of moving our hand to the cup, grasping the cup, lifting the cup, and bringing it toward our mouth.

To accomplish this feat, the brain constantly monitors the position of the hand (our proprioceptive sense), the contact between the hand and the cup (our cutaneous sense), and the force with which we grip the cup (our kinesthetic sense). If the cup is almost full or if there is moisture on the outside of the cup or our hand, we instinctively grip the cup more firmly. Likewise, we can feel the dynamic movement of the liquid in the cup and adjust our limb movement to keep the liquid from spilling. These three senses, proprioceptive, cutaneous, and kinesthetic, comprise our haptic sense, or as it is more commonly known, our sense of touch.

Our sense of touch is vital to most tasks involving physical interaction with the world around us. Imagine trying to drink the cup of coffee with your hands and fingers anesthetized. Yet there are situations in which the sense of touch is not available. For many of these, haptic feedback technologies hold the potential to restore the sense of touch.

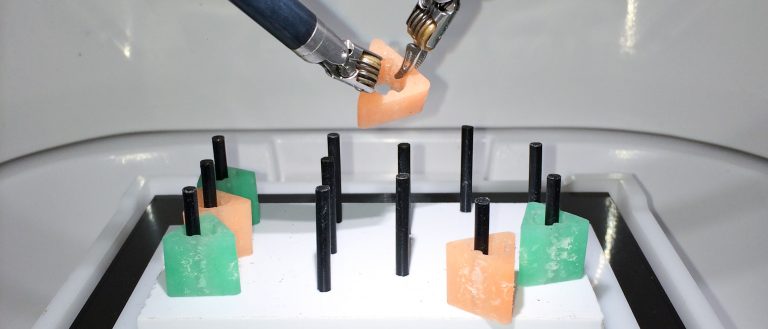

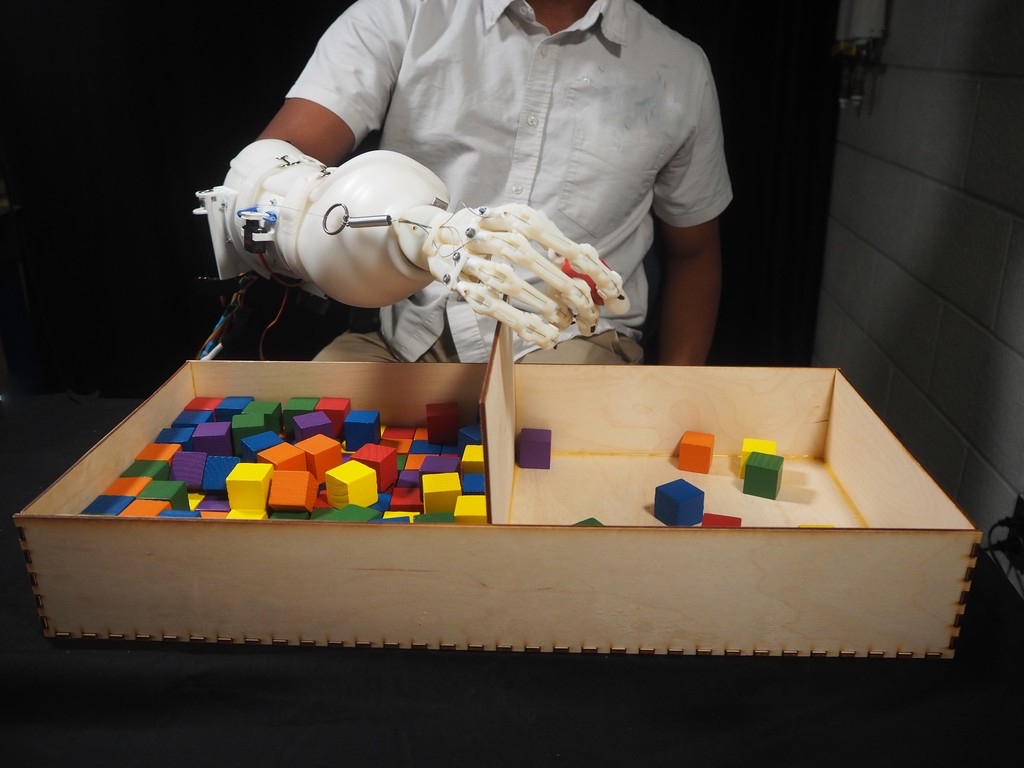

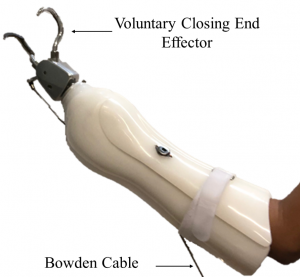

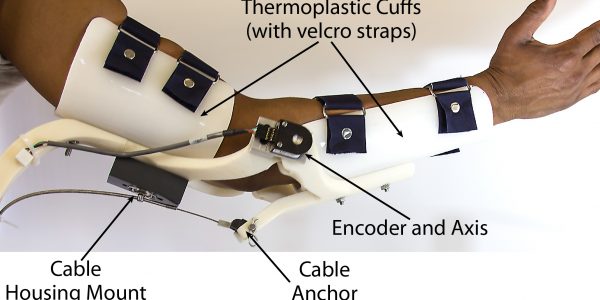

Our research focuses on two such situations in particular: the application of haptic feedback technologies to upper-limb prostheses and to minimally invasive surgical robots.